Web UI

A local web dashboard for visual search, document browsing, editing, and AI-powered answers.

gno serve

# Open http://localhost:3000

Overview

The GNO Web UI provides a complete graphical interface to your local knowledge index. Create, edit, search, and manage your documents, all running on your machine with no cloud dependencies.

| Page | Purpose |

|---|---|

| Dashboard | Index stats, collections, quick capture |

| Search | BM25/vector/hybrid + advanced retrieval controls and tag facets |

| Browse | Paginated documents with collection and date-field sorting |

| Doc View | View document with edit/delete actions and tag editing |

| Editor | Split-view markdown editor with live preview |

| Collections | Add, remove, and re-index collections |

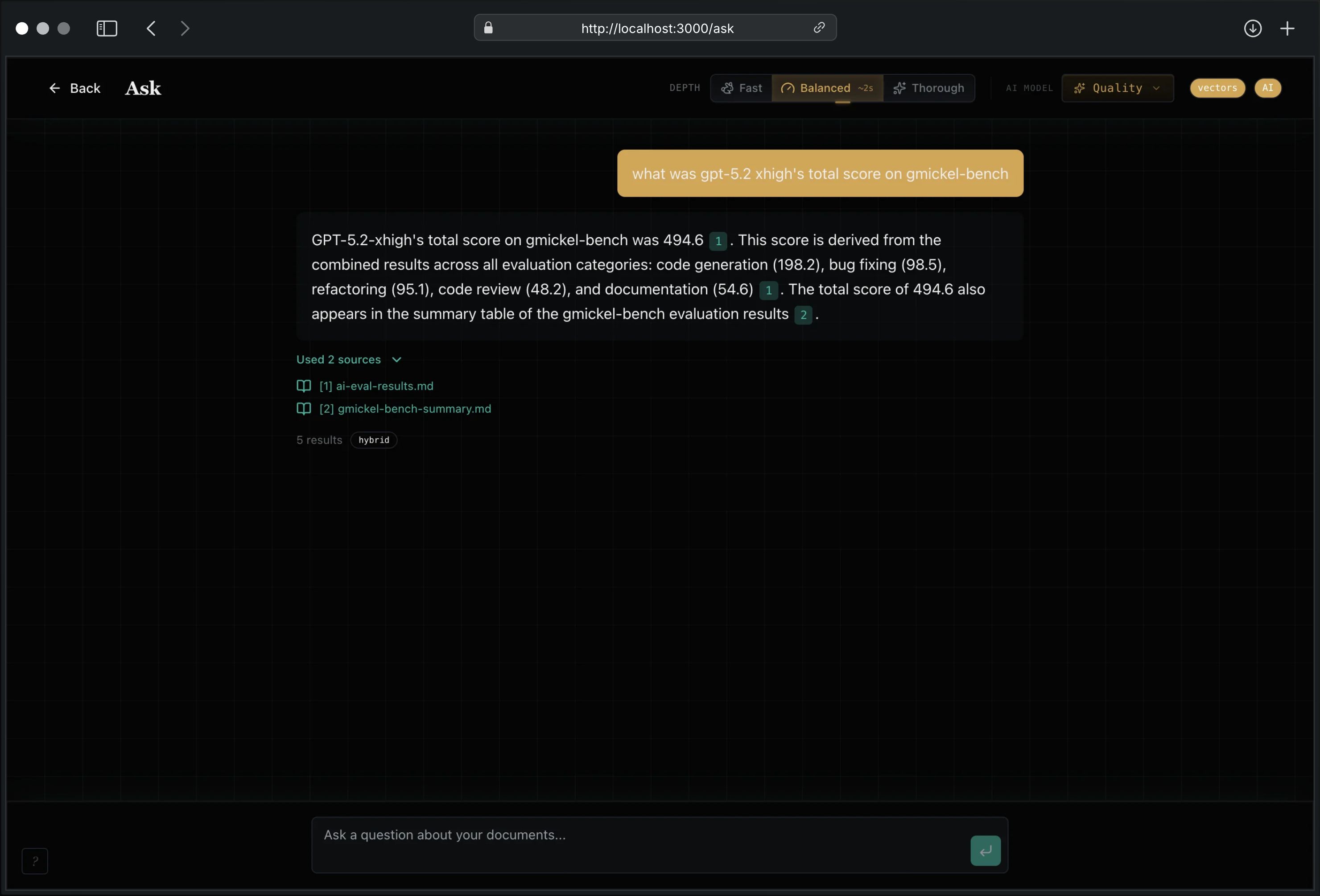

| Ask | AI-powered Q&A with citations |

| Graph | Interactive knowledge graph visualization |

Quick Start

1. Start the Server

gno serve # Default port 3000

gno serve --port 8080 # Custom port

gno serve --index research # Use named index

2. Open Your Browser

Navigate to http://localhost:3000. The dashboard shows:

- Document count: Total indexed documents

- Chunk count: Text segments for search

- Collections: Click to browse by source

- Quick Capture: Create new notes instantly

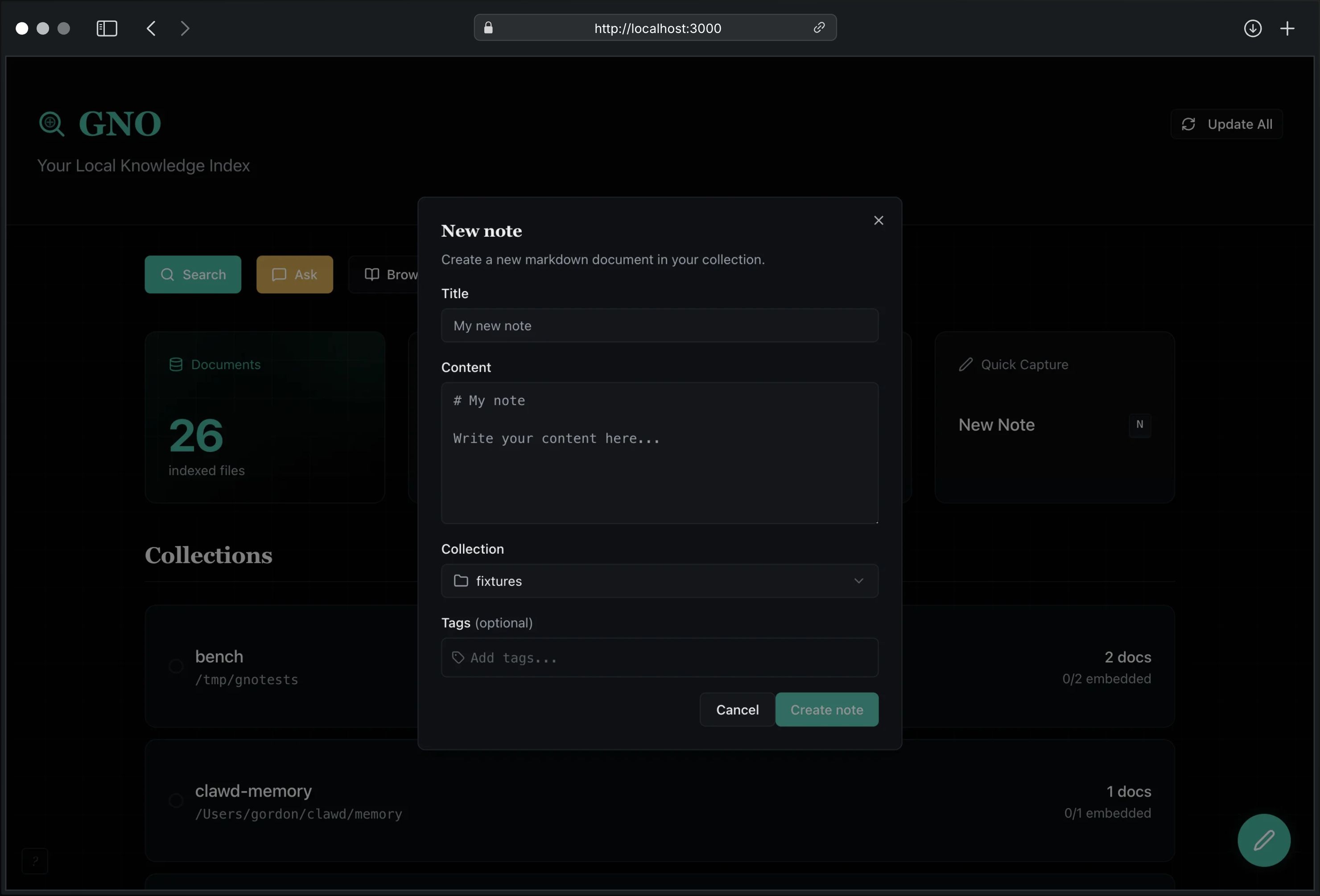

3. Create a Note

Press N (or click the floating + button) to open Quick Capture:

- Enter a title

- Write your content (markdown supported)

- Select a collection

- Add tags (optional, with autocomplete)

- Click Create note

The document is saved to disk and indexed automatically.

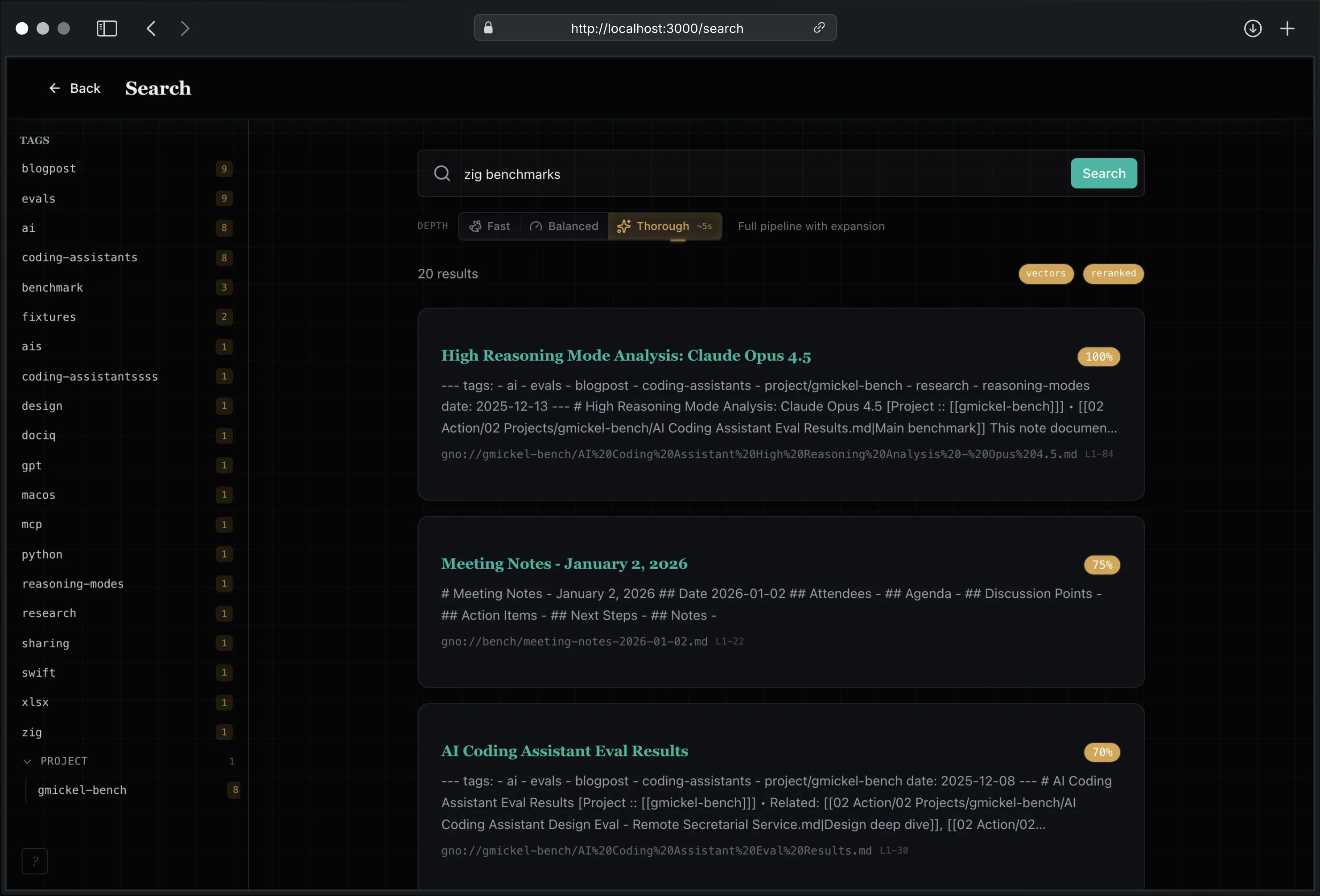

4. Search

Click Search or press /. Choose your mode, then open Advanced Retrieval for:

- Collection filter

- Date range (since/until)

- Category + author filters

- Tag match mode (any/all)

- Query modes (term, intent, hyde)

Choose retrieval mode:

| Mode | Description |
|---|---|
| BM25 | Exact keyword matching |
| Vector | Semantic similarity |
| Hybrid | Best of both (recommended) |

5. Ask Questions

Click Ask for AI-powered answers. Use Advanced Retrieval to scope by collection/date/category/author/tags, then ask your question.

Note: Models auto-download on first use, so the first launch can take longer while local models download. For instant startup, set GNO_NO_AUTO_DOWNLOAD=1 and download the models explicitly with gno models pull.

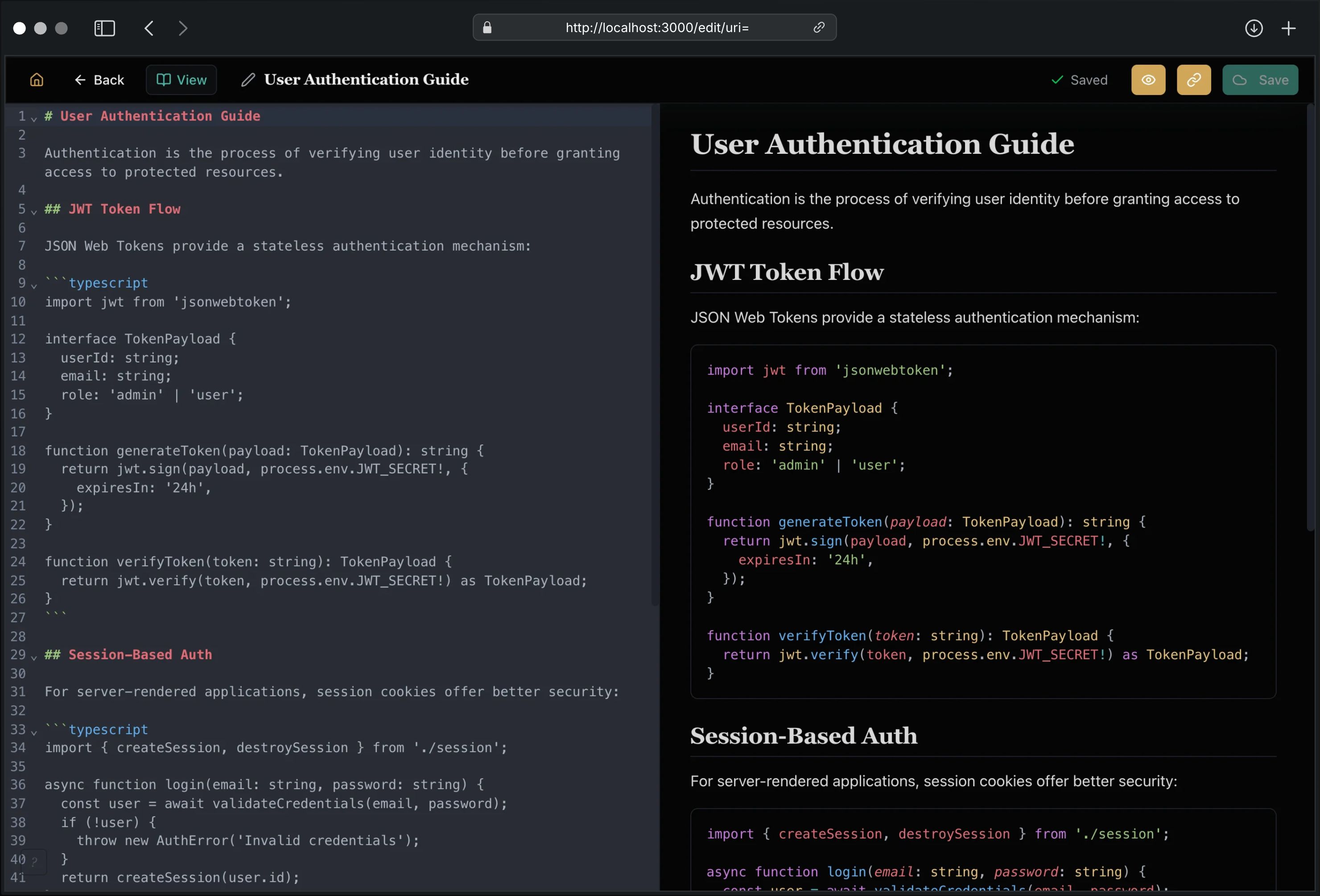

Document Editing

Editor Features

The split-view editor provides:

| Feature | Description |
|---|---|
| CodeMirror 6 | Modern editor with markdown syntax support |
| Live Preview | Side-by-side markdown rendering |
| Auto-save | 2-second debounced saves |
| Syntax Highlight | Code blocks with Shiki highlighting |
| Unsaved Warning | Confirmation dialog before losing changes |
| Toggle Preview | Show/hide preview pane |

Keyboard Shortcuts

Press ? to view all shortcuts. Single-key shortcuts (no modifier needed) work anywhere outside text inputs, as they do in GitHub and Gmail.

Global Shortcuts

| Shortcut | Action |
|---|---|
| N | New note |
| / | Focus search |
| T | Cycle search depth |
| ? | Show help |
| Esc | Close modal |

Editor Shortcuts

| Shortcut | Action |
|---|---|
| Ctrl+S | Save immediately |
| Ctrl+B | Bold selection |
| Ctrl+I | Italic selection |
| Ctrl+K | Insert link |
| Escape | Close editor |

Opening the Editor

From any document view, click Edit to open the split-view editor. Changes are auto-saved after 2 seconds of inactivity.
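The 2-second auto-save behaves like a debounce: each edit restarts the idle window, and a save fires only once the window has elapsed. The sketch below illustrates that idea with an explicit clock so it is easy to follow. `DebouncedSaver`, `on_edit`, and `tick` are hypothetical names for illustration, not GNO's actual editor code.

```python
class DebouncedSaver:
    """Save only after `delay` seconds pass with no further edits."""

    def __init__(self, save, delay=2.0):
        self.save = save        # callback that persists the content
        self.delay = delay      # idle time required before saving
        self.pending = None     # latest unsaved content, if any
        self.last_edit = None   # timestamp of the most recent edit

    def on_edit(self, content, now):
        # Every edit replaces the pending content and restarts the idle window.
        self.pending = content
        self.last_edit = now

    def tick(self, now):
        # Called periodically; saves once the editor has been idle long enough.
        if self.pending is not None and now - self.last_edit >= self.delay:
            self.save(self.pending)
            self.pending = None


saved = []
saver = DebouncedSaver(saved.append)
saver.on_edit("# Dra", now=0.0)
saver.on_edit("# Draft", now=1.5)  # restarts the 2-second window
saver.tick(now=2.0)                # only 0.5 s idle, nothing is saved
saver.tick(now=3.5)                # 2.0 s idle, "# Draft" is saved once
```

Only the final content is written, which is why rapid typing does not produce a save per keystroke.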

Creating Documents

Use Quick Capture (N) for new notes:

- Enter a title (generates filename automatically)

- Write content in markdown

- Select target collection

- Click Create note

The file is written to the collection’s folder and indexed immediately.

Deleting Documents

From document view, click the trash icon. This:

- Removes the document from the search index

- Does NOT delete the file from disk

- The document may reappear in the index on the next sync unless the file is excluded

Collections Management

Collections Page

View and manage your document collections:

- Document count: Files indexed

- Chunk count: Text segments created

- Embedded %: Vector embedding progress

- Re-index: Update collection index

- Remove: Delete collection from config

Adding Collections

Click Add Collection and provide:

- Path: Folder path (e.g., ~/Documents/notes)

- Name: Optional (defaults to folder name)

- Pattern: Glob pattern (e.g., **/*.md)

The collection is added to config and indexed immediately.

Removing Collections

Click the menu (⋮) on any collection card and select Remove. This:

- Removes collection from configuration

- Keeps indexed documents in database

- Documents won’t appear in future syncs

Features

Model Presets

Switch between model presets without restarting:

- Click the preset selector (top-right of header)

- Choose: Slim (default), Balanced, or Quality (best answers)

- GNO reloads models automatically

| Preset | Disk | Best For |
|---|---|---|
| Slim | ~1GB | Fast, good quality (default) |
| Balanced | ~2GB | Slightly larger model |
| Quality | ~2.5GB | Best answer quality |

Model Download

If models aren’t downloaded, the preset selector shows a warning icon. Download directly from the UI:

- Click the preset selector

- Click Download Models button

- Watch progress bar as models download

- Capabilities auto-enable when complete

Indexing Progress

When syncing or adding collections, a progress indicator shows:

- Current phase (scanning, parsing, chunking, embedding)

- Files processed

- Elapsed time

Search Modes

The Search page offers three retrieval modes:

BM25: Traditional keyword search. Best for exact phrases, code identifiers, known terms.

Vector: Semantic similarity search. Best for concepts, natural language questions, finding related content.

Hybrid: Combines BM25 + vector with RRF fusion and optional reranking. Best accuracy for most queries.
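To make the fusion step concrete, here is a minimal sketch of Reciprocal Rank Fusion (RRF) over two ranked lists. It assumes the conventional constant k=60 and plain document IDs. The value GNO actually uses and its optional reranking stage are not shown, and `rrf_fuse` is a hypothetical name.

```python
from collections import defaultdict


def rrf_fuse(rankings, k=60):
    """Fuse ranked lists of document IDs with Reciprocal Rank Fusion.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k=60 is a common default, assumed here.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


bm25_hits = ["a.md", "b.md", "c.md"]    # keyword ranking
vector_hits = ["c.md", "a.md", "d.md"]  # semantic ranking
fused = rrf_fuse([bm25_hits, vector_hits])
print(fused)
```

Documents that rank well in both lists rise to the top, which is why hybrid mode tends to be robust when either retriever alone misses a result.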

Advanced retrieval panel adds structured controls:

- Collection: target one source or search all

- Date range: explicit lower/upper document date filters

- Category/author: frontmatter metadata filters

- Tag mode: switch between tag OR (any) and tag AND (all)

- Query modes: inject term, intent, or hyde entries for structured hybrid expansion

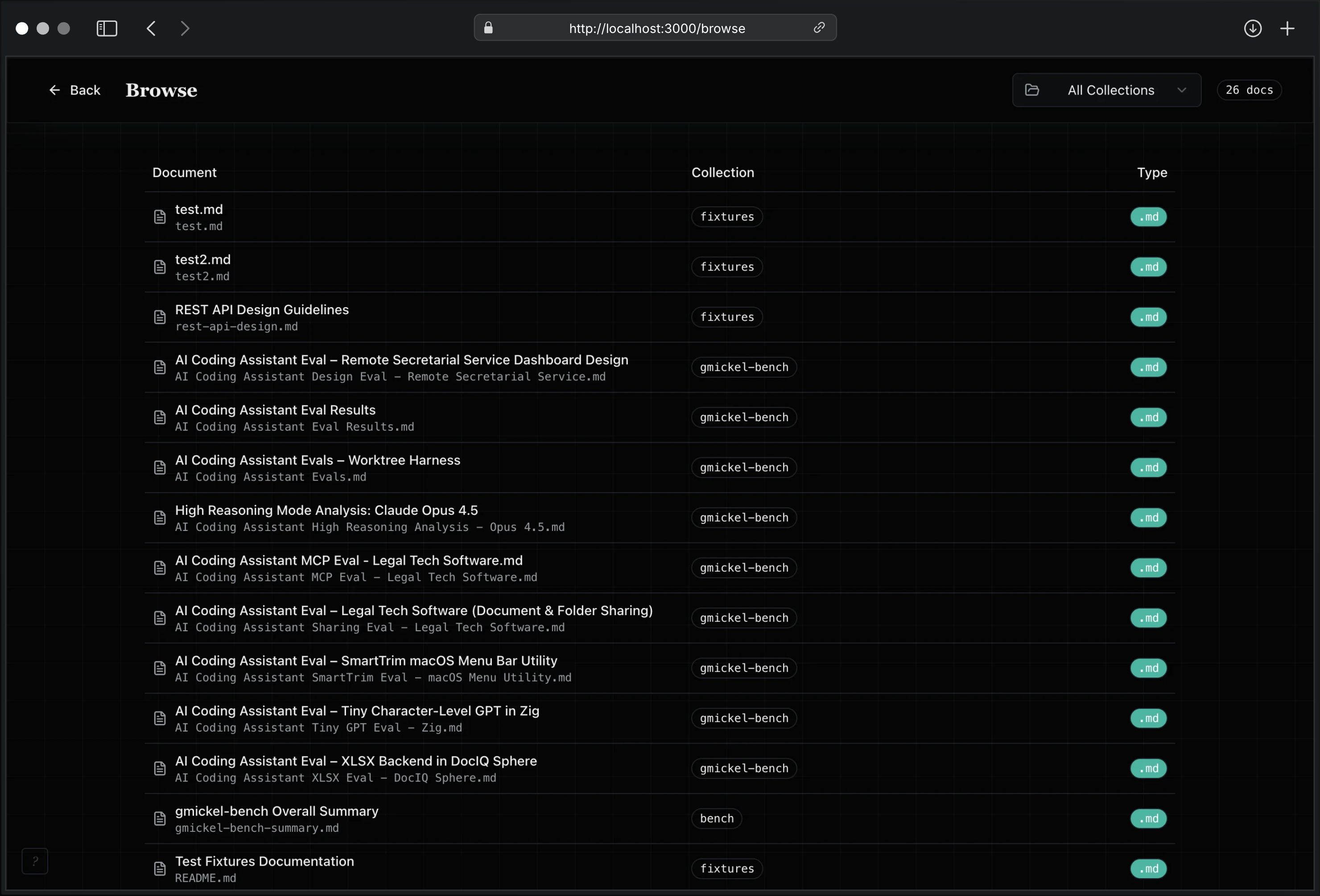

Document Browser

Browse all indexed documents:

- Filter by collection

- Sort by modified time or extracted frontmatter date fields

- Paginated results (25 per page)

- Click any document to view content

- URL query state (/browse?collection=..., /doc?uri=...) updates reactively on in-app navigation and browser back/forward

- Breadcrumb navigation within collections

- Edit and delete actions on each document

AI Answers

The Ask page provides RAG-powered Q&A:

- Enter your question

- GNO runs hybrid search

- Local LLM synthesizes answer from top results

- Citations link to source documents

Knowledge Graph

The Graph page (/graph) provides an interactive visualization of document relationships:

Features:

- Force-directed graph using react-force-graph-2d

- Nodes represent documents, edges represent links

- Three edge types: wiki links, markdown links, similarity edges

- Collection filter dropdown

- Similarity toggle (when embeddings available)

- Truncation warning when graph exceeds limits

- Click any node to navigate to that document

Navigation:

- Dashboard “Graph” button or /graph URL

- Zoom with scroll wheel

- Pan by dragging background

- Click node to open document view

Filtering:

- Collection: Filter to single collection or view all

- Similarity: Toggle similarity edges (requires embeddings)

- Isolated nodes (no links) are hidden by default

Note: When filtering by collection, node degrees may reflect links to documents outside the current view (used for importance ranking).
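One way to read the note above: degrees are computed over the full graph first, and the collection filter is applied afterwards. The sketch below shows that ordering under the assumption that edges are simple (source, target) pairs. The function names and data shapes are hypothetical, not GNO's internal model.

```python
from collections import Counter


def node_degrees(edges):
    """Count how many edges touch each node."""
    degree = Counter()
    for source, target in edges:
        degree[source] += 1
        degree[target] += 1
    return degree


def filtered_view(edges, collection_of, collection):
    """Return (degrees, edges) for one collection.

    Degrees come from the full graph, so a visible node can show more
    connections than the edges drawn in the filtered view.
    """
    degree = node_degrees(edges)  # computed before filtering
    visible_edges = [
        (s, t) for s, t in edges
        if collection_of[s] == collection and collection_of[t] == collection
    ]
    # Nodes with no visible edges are dropped, like hidden isolated nodes.
    visible_nodes = {node for edge in visible_edges for node in edge}
    return {node: degree[node] for node in visible_nodes}, visible_edges


edges = [("a", "b"), ("a", "c"), ("a", "x")]
collection_of = {"a": "notes", "b": "notes", "c": "notes", "x": "work"}
degrees, visible = filtered_view(edges, collection_of, "notes")
```

Here node a keeps a degree of 3 although only two of its edges are drawn, because its link to x in another collection still counts.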

Visual Indicators:

- Node size indicates degree (more connections = larger)

- Wiki links shown as solid lines

- Similarity edges shown as dashed lines

- Hover reveals document title

Document Sidebar

The document view includes a collapsible sidebar with link information:

Backlinks Panel: Shows documents that link TO the current document. Useful for seeing what notes reference this one, enabling Zettelkasten-style navigation.

Outgoing Links Panel: Shows links FROM the current document to other documents.

- Wiki links: [[Note Name]]

- Markdown links: [text](path.md)

- Broken links shown with red indicator when target doesn’t exist
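As a rough illustration of how the two link syntaxes can be told apart, the sketch below pulls wiki links and markdown links out of a note with regular expressions. It is a simplification (it ignores code blocks and link aliases) and the patterns are assumptions, not GNO's parser.

```python
import re

# [[Note Name]] : anything between double brackets
WIKI_LINK = re.compile(r"\[\[([^\[\]]+)\]\]")
# [text](path.md) : a markdown link to a .md file, skipping images (![...])
MD_LINK = re.compile(r"(?<!!)\[([^\]]+)\]\(([^)\s]+\.md)\)")


def outgoing_links(markdown):
    """Return wiki-link targets and markdown-link paths found in the text."""
    wiki = [m.group(1).strip() for m in WIKI_LINK.finditer(markdown)]
    md = [m.group(2) for m in MD_LINK.finditer(markdown)]
    return {"wiki": wiki, "markdown": md}


text = "See [[Project Plan]] and [the spec](docs/spec.md), not ![img](a.png)."
links = outgoing_links(text)
print(links)
```

A link whose target does not resolve to an indexed document would be the kind flagged as broken in the panel.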

Related Notes Panel: Shows semantically similar documents based on vector similarity. Toggle on/off, with similarity scores shown as percentage bars. Great for discovering connections you didn’t know existed.
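Vector similarity is commonly measured with cosine similarity. The sketch below ranks candidate documents against a target embedding and applies a limit and threshold, mirroring the `limit` and `threshold` query parameters of the similar-documents endpoint. The two-dimensional vectors and the function names are illustrative assumptions; the metric GNO uses is not stated in this document.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def related(target, others, limit=5, threshold=0.5):
    """Return up to `limit` (doc_id, score) pairs scoring at least `threshold`."""
    scored = [(doc_id, cosine(target, vec)) for doc_id, vec in others.items()]
    kept = [pair for pair in scored if pair[1] >= threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:limit]


embeddings = {"a.md": [1.0, 0.0], "b.md": [0.6, 0.8], "c.md": [0.0, 1.0]}
result = related([1.0, 0.0], embeddings)
```

Multiplying a score by 100 gives a percentage of the kind shown in the panel's similarity bars.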

Wiki Link Autocomplete

When editing documents, type [[ to trigger wiki link autocomplete:

- Fuzzy matching against all indexed documents

- Cross-collection suggestions with collection: prefix

- Keyboard navigation: ↑/↓ to select, Enter to insert, Escape to cancel

- “Create [[query]]” option to create new notes

- Maximum 8 suggestions shown
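The matching algorithm behind the autocomplete is not specified here. As one plausible reading of "fuzzy matching", the sketch below uses case-insensitive subsequence matching and caps the output at 8 suggestions. The ranking rule (shorter titles first) and the function names are assumptions.

```python
def is_subsequence(query, title):
    """True if the characters of `query` appear in order within `title`."""
    remaining = iter(title.lower())
    # `ch in remaining` advances the iterator until it finds ch.
    return all(ch in remaining for ch in query.lower())


def suggest(query, titles, limit=8):
    """Return up to `limit` titles that fuzzily match `query`."""
    matches = [title for title in titles if is_subsequence(query, title)]
    # Assumed ranking: shorter titles first, then alphabetical.
    matches.sort(key=lambda title: (len(title), title))
    return matches[:limit]


titles = ["Project Plan", "Prose", "Paper Jam"]
print(suggest("prj", titles))
```

Typing `prj` after `[[` would therefore surface both "Paper Jam" and "Project Plan" but not "Prose", which has no j.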

Tags

Tags provide hierarchical classification for your documents. Use the Search page sidebar and document views to filter and manage tags.

Tag Format

Tags follow a simple grammar:

- Lowercase letters, numbers, hyphens, dots

- Hierarchical with / separator (e.g., project/web, status/in-progress)

- Unicode letters supported
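The grammar above can be expressed as a small validator. This is a sketch under two assumptions the document does not state: empty segments (as in `a//b`) are rejected, and there is no length limit. `is_valid_tag` is a hypothetical helper, not part of GNO.

```python
def is_valid_tag(tag):
    """Return True if `tag` fits the tag grammar described above."""
    if not tag:
        return False
    for segment in tag.split("/"):
        if not segment:  # rejects "a//b", "/a", and "a/" (assumed rule)
            return False
        for ch in segment:
            if ch in "-.":
                continue
            # isalnum() accepts Unicode letters and digits;
            # the lower() comparison rejects uppercase letters.
            if not ch.isalnum() or ch != ch.lower():
                return False
    return True


for candidate in ["project/web", "status/in-progress", "Project", "a//b"]:
    print(candidate, is_valid_tag(candidate))
```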

Search Page Tag Facets

The Search page includes a sidebar with tag facets:

- Browse tags: View all tags grouped by hierarchy

- Filter by tag: Click a tag to filter search results

- Active filters: Tags shown as chips above results

- Clear filters: Click chip X or “Clear all” to remove

Quick Capture Tags

When creating notes via Quick Capture (N):

- Fill in title and content

- Add tags using the tag input field

- Type to search existing tags (autocomplete)

- Press Enter to add new tags

- Click X on chips to remove

Document View Tags

From any document view:

- See current tags displayed as badges

- Click Edit to modify tags

- Use the tag input with autocomplete

- Click Save to update

Tag changes are saved to the document’s frontmatter (for markdown files).

Configuration

Command Line Options

gno serve [options]

| Flag | Description | Default |
|---|---|---|
| -p, --port <num> | Port to listen on | 3000 |
| --index <name> | Use named index | default |

Environment Variables

| Variable | Description |
|---|---|
| NODE_ENV=production | Disable HMR, stricter CSP |
| GNO_VERBOSE=1 | Enable debug logging |
| HF_HUB_OFFLINE=1 | Offline mode: use cached models only |
| GNO_NO_AUTO_DOWNLOAD=1 | Disable auto-download (allow explicit gno models pull) |

Security

The Web UI is designed for local use only:

| Protection | Description |
|---|---|
| Loopback only | Binds to 127.0.0.1, not accessible from network |
| CSP headers | Strict Content-Security-Policy on all responses |
| CORS protection | Cross-origin requests blocked |
| No external resources | No CDN fonts, scripts, or tracking |
| Path traversal guard | Write operations validate paths stay within root |
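A path traversal guard of the kind listed in the table typically resolves the requested path and checks that it still sits under the collection root. The sketch below shows that check with `pathlib`. The root folder and the helper name are hypothetical, and GNO's own validation may differ.

```python
from pathlib import Path


def is_inside_root(root, rel_path):
    """Return True if rel_path, resolved against root, stays strictly inside root."""
    root_dir = Path(root).resolve()
    # resolve() collapses ".." segments and follows any symlinks that exist.
    target = (root_dir / rel_path).resolve()
    return root_dir in target.parents


root = "/tmp/gno-notes"  # hypothetical collection folder
print(is_inside_root(root, "new-note.md"))    # inside the root
print(is_inside_root(root, "../outside.md"))  # escapes the root
```

Absolute paths such as `/etc/passwd` are rejected as well, because joining an absolute path onto the root discards the root entirely.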

Warning: Do not expose gno serve to the internet. It has no authentication.

Pro tip: Want remote access? Put a tunnel that handles authentication in front of the server:

- Tailscale Serve: Expose to your Tailnet

- Cloudflare Tunnel: Free tier with auth

- ngrok: Quick setup, supports basic auth

Architecture

Browser

│

▼

┌─────────────────────────────────────┐

│ Bun.serve() on 127.0.0.1:3000 │

│ ├── React SPA │

│ │ ├── Dashboard (/, stats) │

│ │ ├── Search (/search) │

│ │ ├── Browse (/browse) │

│ │ ├── DocView (/doc) │

│ │ ├── Editor (/edit) │

│ │ ├── Collections (/collections) │

│ │ ├── Ask (/ask) │

│ │ └── Graph (/graph) │

│ └── REST API (/api/*) │

├─────────────────────────────────────┤

│ ServerContext │

│ ├── SqliteAdapter (FTS5) │

│ ├── EmbeddingPort (vectors) │

│ ├── GenerationPort (answers) │

│ └── RerankPort (reranking) │

└─────────────────────────────────────┘

Troubleshooting

“Port already in use”

gno serve --port 3001

# Or find and kill the process:

lsof -i :3000

kill -9 <PID>

“No results” in search

gno status

gno ls

# If empty, run:

gno index

AI answers not working

gno models list

gno models pull

Editor not loading content

Refresh the page. If content still doesn’t appear, check browser console for errors.

Changes not saving

- Check browser console for API errors

- Verify collection folder has write permissions

- Check disk space

API Access

The Web UI is powered by a REST API. See API Reference for details.

# Create document

curl -X POST http://localhost:3000/api/docs \

-H "Content-Type: application/json" \

-d '{"collection": "notes", "relPath": "new-note.md", "content": "# Hello"}'

# Update document

curl -X PUT http://localhost:3000/api/docs/abc123 \

-H "Content-Type: application/json" \

-d '{"content": "# Updated content"}'

# Get outgoing links

curl http://localhost:3000/api/doc/abc123/links

# Get backlinks (who links to this doc)

curl http://localhost:3000/api/doc/abc123/backlinks

# Get similar documents

curl "http://localhost:3000/api/doc/abc123/similar?limit=5&threshold=0.5"
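The same calls can be made from a script. The sketch below builds two of the requests shown above with Python's standard library, using only the endpoints and fields from the curl examples. The helper names are hypothetical, and the response format is not documented here, so the code stops short of parsing a response.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:3000"  # default gno serve address


def create_doc_request(collection, rel_path, content):
    """Build the POST /api/docs request from the curl example."""
    body = json.dumps(
        {"collection": collection, "relPath": rel_path, "content": content}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/api/docs",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def similar_docs_request(doc_id, limit=5, threshold=0.5):
    """Build the GET /api/doc/<id>/similar request from the curl example."""
    query = urllib.parse.urlencode({"limit": limit, "threshold": threshold})
    return urllib.request.Request(f"{BASE_URL}/api/doc/{doc_id}/similar?{query}")


req = create_doc_request("notes", "new-note.md", "# Hello")
# To send it against a running server:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status, resp.read().decode("utf-8"))
```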